Clouds of Knowledge

What do I hope to convince you of with this note?

The Public Cloud vs Private Cloud nomenclature is no longer valid (AI blurs the boundaries). Public Knowledge vs Private Knowledge is more relevant.

Neoclouds are of many types and some are passing clouds, but they will look different before 2030. I will speculate on what is likely to happen.

Private knowledge clouds - the emergent white space

A quick pre-read of Cloud_vs_fabs will provide the context for this note.

AI has blurred the notion of Public vs Private clouds. There is the public cloud, as in AWS, GCP and Azure. There is the private cloud (largely OEM-enabled private data centers). And there have been Tier-2 clouds, or perhaps Alternative Clouds. The emergence of the ‘Neocloud’, a term perhaps made ubiquitous by SemiAnalysis, needs better explanation and classification. Before that, let us look at how the term is defined.

I asked Perplexity, and it said “A neocloud is a new category of cloud provider purpose-built for artificial intelligence (AI), machine learning (ML), and high-performance computing (HPC) workloads. Unlike traditional hyperscalers such as AWS, Azure, or Google Cloud, which offer broad general-purpose services, neoclouds specialize almost entirely in GPU-as-a-Service (GPUaaS) or AI-as-a-Service (AIaaS) infrastructure optimized for demanding, massively parallel computational tasks”. Equinix defines it as “one that’s purpose-built for AI and complementary to traditional cloud offerings”.

Well, everybody is going AI-first, and everybody needs a GPU for some LLM compute. The patterns that are emerging follow the lines of knowledge rather than pure GPUaaS or “AI-first”; those two terms do not describe a sustainable value proposition and do not reflect what is emerging. Further, consider the many silicon providers that have been swimming upstream (Cerebras, Groq, SambaNova, to name a few): they offer ‘Cloud’ in addition to, or in lieu of, a chip or a system because they face challenges selling their tech. Hence I suggest a new nomenclature built around ‘Knowledge’. Public vs Private was rather well defined for an elastic compute service made available for anybody to consume (Public) vs one specific to a customer/enterprise (Private). But we are entering an era of Knowledge services, which is tied partly to the data and partly to the type of knowledge service. So I hope the term knowledge better reflects the way forward.

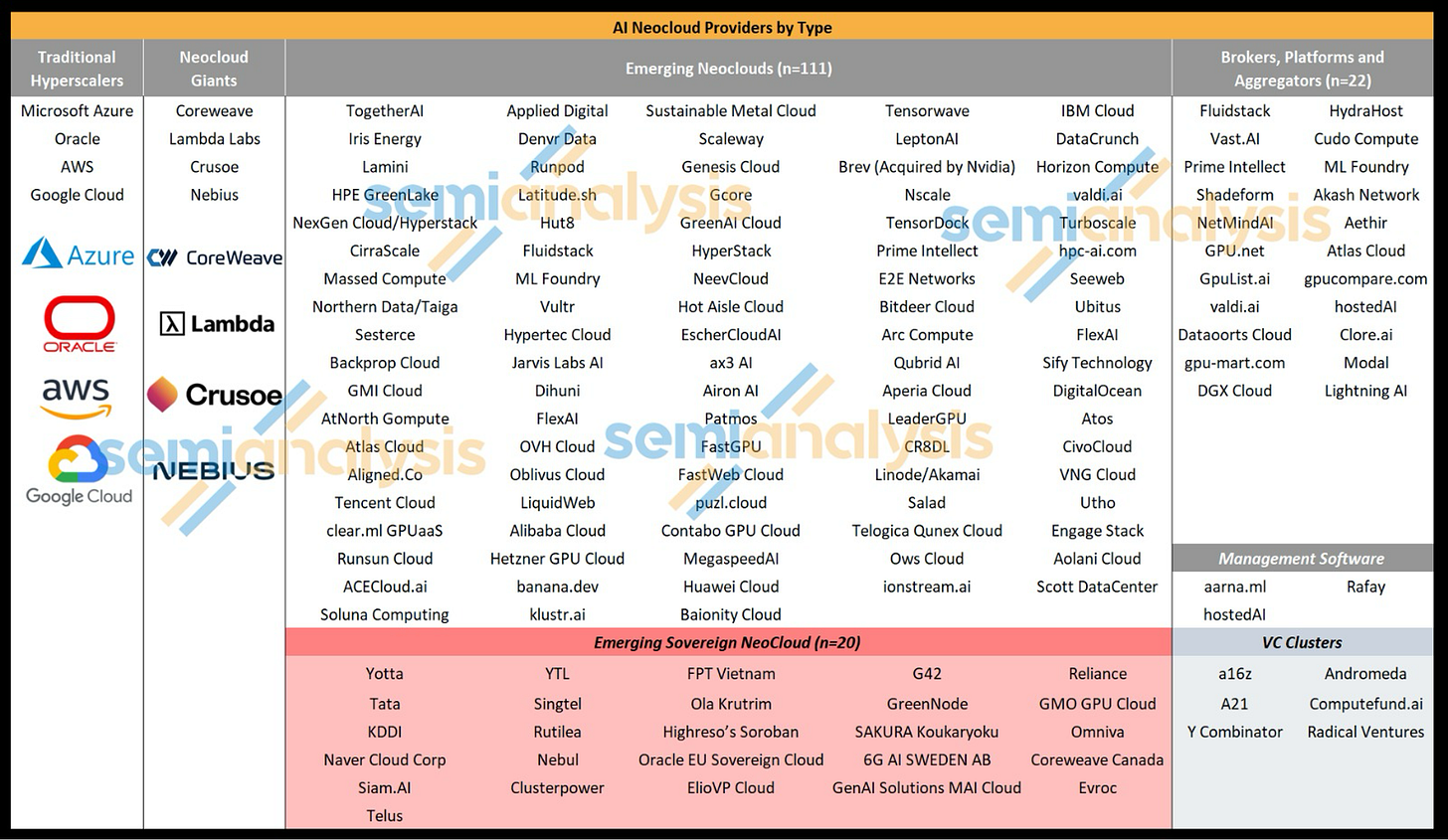

Before we get into knowledge, let us peel the onion on the Neocloud grouping. As represented by SemiAnalysis, there are approximately 200 of them worldwide, as shown in this visual.

Source: SemiAnalysis

But as you peel back this group, there are at least 4 types of offerings within this Neocloud definition.

Clearly it’s a smorgasbord of companies ranging from GPUaaS to ‘AI-as-a-service’ to AI-application-as-a-service. Some of the AI-as-a-service players (e.g. together.ai, fireworks.ai and OpenAI) use their own cloud, at times a rented neocloud, as well as the public cloud. That points to the fact that AI compute is everywhere, and these players serve end users from whichever mix suits their business model, needs and other factors. So a broad ‘Neocloud’ label is hard to make precise, and it is going to be ephemeral (like in the dot-com days; recall Exodus, Rackable, Rackspace, etc.), i.e. pure GPUaaS will morph over time.

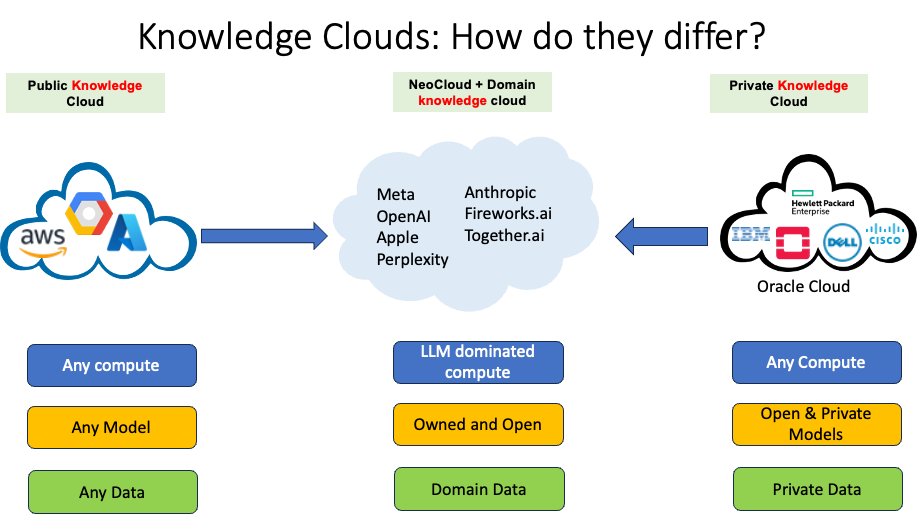

To better contrast the type of compute, the data and the end AI application, I would suggest ‘knowledge’ as a term. AI (via LLMs) brings knowledge to the forefront, specifically by extracting intelligence out of data and representing it as knowledge. Knowledge, as we know it, comes largely from 3 basic sources: Public Knowledge (everybody has equal access), Private Knowledge (private or protected) and Domain Knowledge (domain expertise).

Domain-specific (or shared) knowledge available for a license includes a coding cloud (Claude), LexisNexis for legal databases, or GIS information that a subset of the public needs or cares about. In the prior era, this was the SaaS cloud; but SaaS, unlike knowledge, was primarily workflow automation delivered as a SaaS application. For the purpose of this blog I will use shared knowledge and domain-specific knowledge interchangeably, preferring domain-specific as the more appropriate term.

In both public and private clouds, you have general-purpose compute as well as general AI compute (any LLM plus all the bells and whistles of generalized compute). But only open models are generally available for local hosting in private clouds; closed models are accessed via API gateways.

Enter Neoclouds AND domain-specific clouds, which are largely either large domain users (Meta, Apple) or newly emergent LLM-based inference or application providers (OpenAI, Claude, together.ai, fireworks.ai).

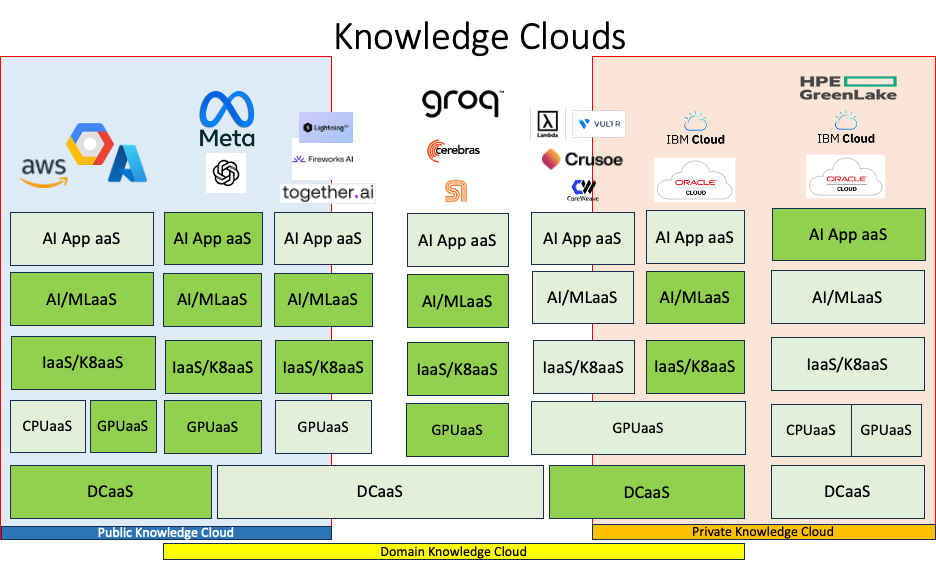

This is a complex visual. But there are 4 representations.

Public vs Domain vs Private Knowledge Clouds

The green boxes are owned or engineered. The light green boxes are either sourced from 3rd parties or rented.

Except for the left and right columns, the middle 5 are variations of the Neoclouds as SemiAnalysis had grouped them. I have tried a new classification here. To enumerate:

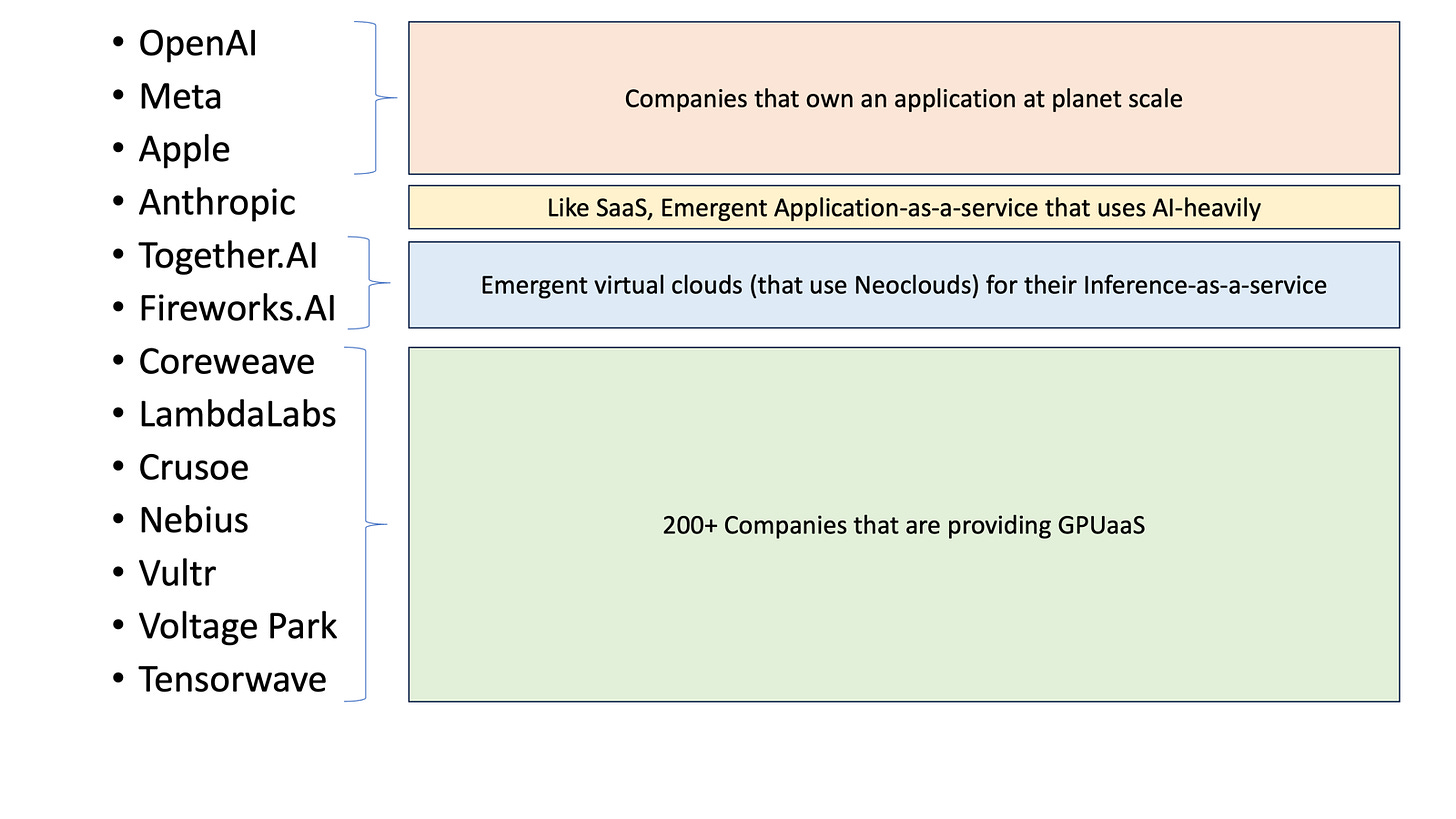

There are at least 5 types of Neoclouds (which will provide AIaaS in various forms):

AIaaS: OpenAI, Anthropic/Claude. Full app-as-a-service (the entire stack)

LLMaaS: together.ai, fireworks.ai

GPUaaS: CoreWeave/Lambda/Crusoe. Mostly operational and financial engineering

Enterprise AIaaS: IBM, Oracle and other private data centers (like JPMC, Citizen)

xPUaaS: Groq, Cerebras, SambaNova. They provide their silicon as a differentiator via a cloud

AIaaS (Facebook, Apple and now OpenAI, Anthropic/Claude): These are players that have 1 or 2 applications at scale and have become dominant in their category. They don’t provide general-purpose compute or compute as a utility; they serve a few apps that they own, at scale. OpenAI has grand ambitions to do all things the world needs, but for now it is primarily GPTaaS (approaching a billion users). Dropbox and Uber have been in this category and remain successful within their niches.

Inference-as-a-service: together.ai, fireworks.ai and a plethora of similar inference-only clouds (as a starting point) have emerged. It is unclear (and, I think, quite unlikely) that they can stay there: inference-as-a-service is an opportunistic play, but it may not scale into a sustainable business. Expect many of them to pivot or morph (adding breadth or depth to their capabilities), and over time to get acquired or to own an AIaaS offering; otherwise they get trapped by the law of the excluded middle.

GPUaaS: There are 100+ companies and $40B+ invested, not to mention the sovereign cloud investments in the Middle East. As mentioned in prior blogs, this segment was initially propped up by Nvidia to have an alternative supply chain for Nvidia, and the overall market growth is now attracting financial investors to support them. Clearly the market will not sustain 100+ of these, but the consolidated few will not look like a Public or Private cloud, as they will lack the engineering capability. My speculation is that they will remain either as overflow capacity, as the AI-era version of Equinix and CoreSite (i.e. traditional CoLo providers that take care of the complexity of assembling, managing and maintaining hardware as the Private Knowledge Cloud market grows), or as proxies for the silicon providers (as many are for Nvidia today).

Silicon-as-a-service: These are folks like Groq, SambaNova and Cerebras. They are carrying a huge burden of two multi-$B investments: one in silicon, and the other in an AI software stack that is compatible with, or beats, CUDA from an end-user perspective. These have not been adopted to date by Private Knowledge Cloud AND Public Knowledge Cloud players (which tells you something about their value prop), despite all the performance, price and other claims. To survive, they will have to swim upstream (like Anthropic/Claude) to own an application of value that leverages their silicon in a unique way (which is hard). Google’s TPU has shown that there is not much room to differentiate (at least for transformer-based neural networks).

Enterprise AI: I will discuss these as part of Private Knowledge Clouds.

While the Public Knowledge Cloud is a superset, the neoclouds will specialize over time to differentiate, and Private Knowledge Clouds will have private data and private compute for reasons of regulation, data sovereignty and data gravity.

Unlike the SaaS offerings, which were dominated by the traditional Public Cloud providers that hosted them, we see a pattern where domain-specific AI services are available in both Public Clouds and Neoclouds, but not in private clouds (for now).

Public Cloud does not dominate all compute today. Why is that?

Handicapping Public Clouds:

Cost of GPUaaS: For many, the public cloud is expensive, and the software stack is evolving at such a rapid pace that there is no clear leader to justify depending on a Public Cloud. Also, Nvidia controls both the supply chain and elements of the stack (HW/SW), which enables emergent players to compete on cost and feature set.

CUDA_vs_rest: There is a massive challenge in keeping up with the evolution of the model space and the system-level stack. CUDA is the benchmark; ROCm and Google JAX have reached escape velocity with developers, while others are and will be challenged. Here’s a high-level summary of the software stack that one has to build to be competitive. AWS has barely got Trainium to work, and Microsoft is behind. While they will eventually get their stacks to dance, the rate of change and innovation is keeping AWS and Microsoft busy. Other than AMD (ROCm) and Google (JAX), I don’t see anyone able to match CUDA over a sustained period of time.

Own vs Rent: There was a crossover point (around $200M in ARR) beyond which owning your own private instance was cheaper than using the Public cloud (Martin Casado, the trillion dollar cloud paradox). That economic case existed but did not materialize because of the cost of repatriation; it is now emerging in the context of Neoclouds. Neoclouds are starting with a clean slate and are thus more inclined to own the entire cost model. More important is the churn in ‘systems engineering’: innovation at the various layers above GPU + CUDA (vLLM, PyTorch, MCP-like APIs) is happening at a pace that even the hyperscalers cannot keep up with. So for the first time there is highly distributed innovation, in which even well-capitalized, resource-rich clouds cannot keep up with agile, nimble startups, and Nvidia is making sure, from a supply chain management standpoint, that there is choice for them and for the customer(s).
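To make the crossover argument concrete, here is a minimal break-even sketch in Python. It is a simplified model under stated assumptions, not the analysis behind the $200M figure: owned GPUs carry straight-line amortized capex, per-GPU opex and a fixed platform-team cost, while rented GPUs are billed per GPU-hour only for the hours actually used. Every name and number here (`OwnedFleet`, `breakeven_gpus`, $40,000 per GPU, $2.50 per GPU-hour and so on) is hypothetical and chosen only for illustration.

```python
import math
from dataclasses import dataclass
from typing import Optional

HOURS_PER_YEAR = 8760


@dataclass
class OwnedFleet:
    """Hypothetical cost inputs for owning GPUs (illustrative figures, USD)."""
    capex_per_gpu: float       # purchase + install cost per GPU
    amortization_years: float  # straight-line depreciation period
    opex_per_gpu_year: float   # power, cooling, space, maintenance per GPU per year
    fixed_opex_year: float     # platform/ops team and tooling, independent of fleet size

    def variable_cost_per_gpu_year(self) -> float:
        return self.capex_per_gpu / self.amortization_years + self.opex_per_gpu_year

    def annual_cost(self, gpus: int) -> float:
        # Owned costs accrue whether or not the GPUs are busy.
        return self.fixed_opex_year + gpus * self.variable_cost_per_gpu_year()


def annual_rent_cost(gpus: int, price_per_gpu_hour: float, utilization: float) -> float:
    """Assumed on-demand renting: pay only for the GPU-hours actually used."""
    return gpus * HOURS_PER_YEAR * utilization * price_per_gpu_hour


def breakeven_gpus(fleet: OwnedFleet, price_per_gpu_hour: float,
                   utilization: float) -> Optional[int]:
    """Smallest fleet size at which owning costs no more than renting.

    Solves fixed + n * own_var <= n * rent_per_gpu for the smallest integer n.
    Returns None when renting is cheaper per GPU, so owning never catches up.
    """
    rent_per_gpu_year = HOURS_PER_YEAR * utilization * price_per_gpu_hour
    margin = rent_per_gpu_year - fleet.variable_cost_per_gpu_year()
    if margin <= 0:
        return None
    return math.ceil(fleet.fixed_opex_year / margin)


if __name__ == "__main__":
    fleet = OwnedFleet(capex_per_gpu=40_000, amortization_years=4,
                       opex_per_gpu_year=5_000, fixed_opex_year=3_000_000)
    print(breakeven_gpus(fleet, price_per_gpu_hour=2.50, utilization=0.9))
    print(breakeven_gpus(fleet, price_per_gpu_hour=2.50, utilization=0.5))
```

With these illustrative inputs, the sketch finds a break-even at 637 GPUs at 90% utilization, and returns `None` at 50% utilization because renting is then cheaper per GPU. The fixed platform cost is the term that creates a crossover point at all, which mirrors the scale argument behind own vs rent.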

Public clouds are not going anywhere. They will eventually rally around their own hardware stack plus Nvidia: all the public clouds will use Nvidia for training, and a mix of their own silicon + stack and Nvidia (and, for some, AMD) beyond that.

Handicapping Neoclouds:

AIaaS Neocloud: These are the next Meta (along with Meta itself), Apple and other AIaaS players (with or without a device). The handicaps are capital and talent; otherwise, they can end up standalone and challenge the public cloud. OpenAI, along with Meta, is well on its way to being exactly that.

Inference-as-a-service Neocloud: It is unlikely that customers will (over time) use these companies in addition to OpenAI, Gemini and other LLMaaS from public cloud providers, or locally hosted ones. My guess is that they will either have to go more vertical (up or down the stack) or be acquired. I hope to be proven wrong, if this becomes a standalone category.

GPUaaS Neocloud: As stated above, the 100+ will dwindle to 5-10 worldwide. There might be sovereign ones like Alibaba and Tencent; the EU and India, as regions, can each support a couple (maybe 3).

Enterprise AI: Most enterprises need both traditional cloud-like IaaS (VMaaS, K8saaS) and PaaS, as well as GPUaaS, LLM-as-a-service and MLOps-as-a-service. Pulling together all the disparate pieces, with variable CPU:GPU ratios, is a tall order, because enterprise workloads are not pure LLM inference (GPU).

Enterprise AIaaS: This category, especially Oracle, is here to stay. It has achieved critical mass (capital, operational strength, and ownership or trust of private data). These players are going to blur the lines with private data centers and continue to own their customers’ enterprise private knowledge on their behalf.

The Neoclouds are here to stay, thanks to Nvidia (and soon Broadcom and AMD) supporting them both technologically and financially, and they will act as a bridge to Private Knowledge Clouds. But which sub-category will survive is open for debate. That brings us to the last, most important, and so far barely noticed category amid all the AI hype.

Private Knowledge Clouds are emerging (the sleeper market). There are 3 or 4 properties that make this a standalone category. The last attempts by Google (Anthos), Amazon (Outposts) and Azure (Azure Stack) failed, for reasons related to cost but, more importantly, to the operational model, which does not jibe with the operational model of private clouds. But with AI, every piece of known data within an enterprise is useful and relevant, be it for search, deep research, automation or any number of enterprise workflows that can use digitized data to improve productivity. That will emerge as a broad category. The key properties are:

Allow the enterprise to own, secure, manage and operate the data it owns and the data it generates. This will remain an enterprise IT problem (or value).

It’s not all LLM compute. A significant portion is relational and structured data. LLMs don’t need to be, and never will be, trained on all of the structured data.

Most compute on enterprise data runs on x86, and x86 will continue to host enterprise compute. The notion that ARM, RISC-V or any other ISA will replace x86 is a lost battle.

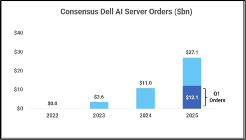

Private data needs modern AI compute to capture Private Knowledge and retain it within the physical and virtual walls. Sure, VPCs and the like provide a mechanism to do the same in public clouds, but there is so much data that compute will move to the data, not the other way around, as witnessed by this visual and quote by Michael Dell. An indicator of the growth and opportunity is Dell and its revenue: Dell is going gangbusters, from $2B/year of sales in 2023 to $40B of sales in 2025, thanks to Nvidia.
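As a back-of-the-envelope check on the Dell figures quoted above, using only those two numbers and assuming (as a simplification) a constant growth rate over the two years:

$$
\text{growth multiple} = \frac{\$40\text{B}}{\$2\text{B}} = 20, \qquad
\text{annual multiple} = 20^{1/2} \approx 4.47, \qquad
\text{CAGR} \approx 4.47 - 1 \approx 347\%
$$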

With the above issues, the value prop of a neocloud is not as appealing to an enterprise: enterprises have huge investments in compute and data infra, can’t walk away from general-purpose compute (x86 specifically), and generally run mixed-mode (CPU + GPU) workloads. The notion of separate islands of inference (GPU) clusters and CPU will blur. The unit of compute is smaller (32/64 nodes) and very price/performance sensitive. At the core, it is enterprise data and compute on enterprise data. It’s best said, as always, by Dr. Jesus Jensen: “Good old data processing needs modern compute.”

This is a domain where the AMD roadmap comes into full force. AMD is well positioned, from a silicon and systems architecture standpoint, to own this market, although Nvidia has the developer and marketing mindshare. This is AMD’s to lose, because it is a gunky, complicated mess of CPUs, GPUs, data infra, etc., and incremental capex as opposed to a forklift upgrade. I see Oracle playing a strong card on private data (Oracle databases) and Nvidia using its balance sheet and investments to play strong. Intel has lost its way, but the recent Nvidia-Intel deal vouches for why x86 is still relevant for Private Knowledge Clouds, and NVLink Fusion is a way for Nvidia to grab that space (and kill UALink along the way, too). (Side note: in 2023, Jensen said it’s all accelerated compute and the world will have to switch over to his view of the architecture. In 2025, among many reasons, he has made a deal with Intel.) In the Intel-Nvidia NVLink Fusion Q&A, he articulates why he HAD to bear-hug x86 infrastructure. It’s better coming from Jensen than from anybody else today.

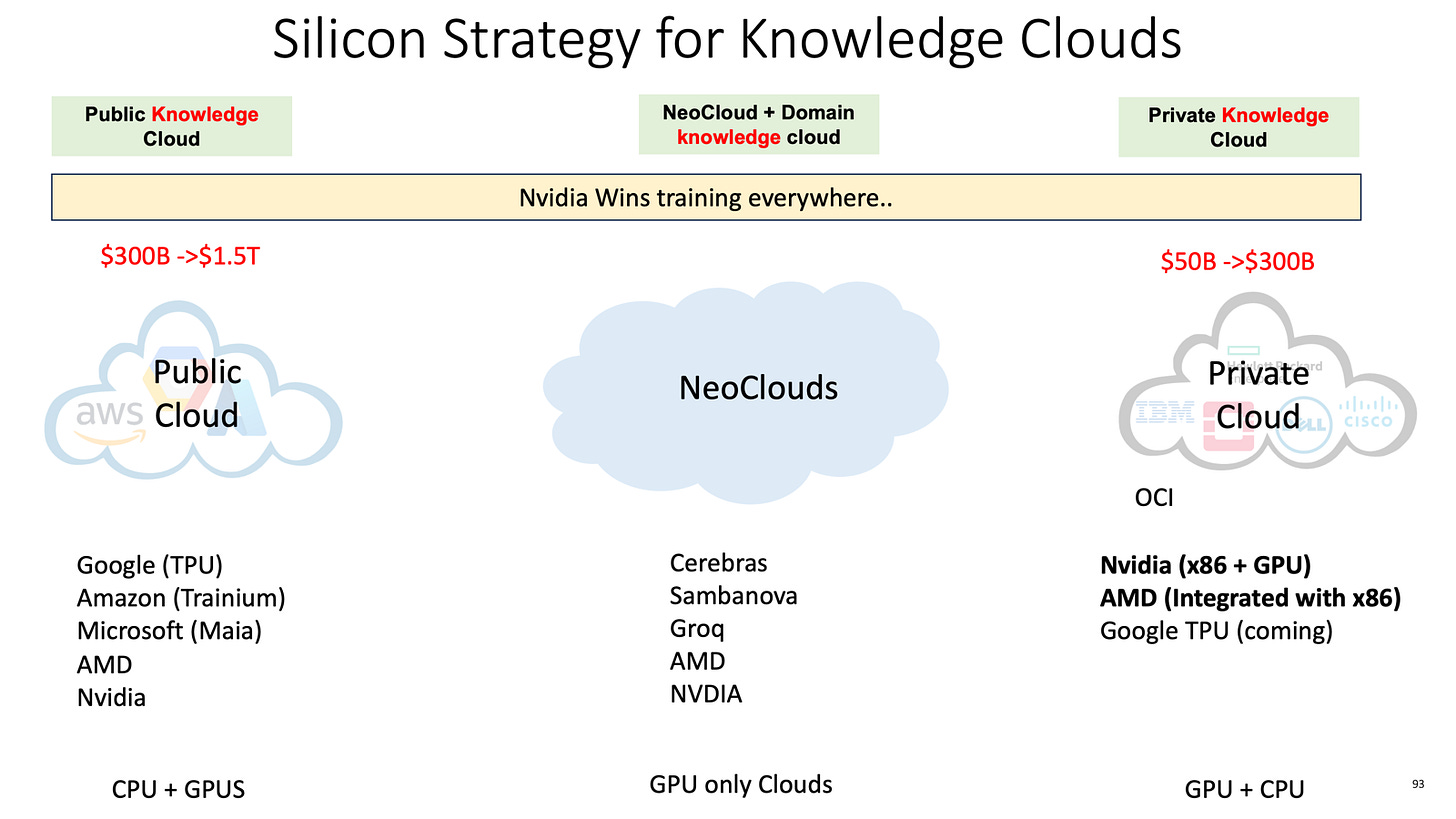

Finally, mapping the current and future silicon roadmaps to the three Public, Domain and Private Knowledge Clouds, this is the way I see it shaping up.

The final summary and takeaways:

The silicon roadmap for the three categories is shaping up as above. The middle is a smorgasbord, which will shrink over time.

But I want to reiterate what I said at the beginning:

Public Knowledge Cloud will be for the few (Google, AWS, Azure and perhaps OpenAI, Anthropic, Meta…)

Neoclouds are of many types and some are passing clouds, but they will look different before 2030. There will be many, but Domain Knowledge Clouds will emerge, as will clouds supported by silicon providers (Nvidia, AMD and Broadcom) for those who are not in Public Clouds.

Private Knowledge Clouds: the emergent white space, or rather a space re-imagined in the context of AI that will become a category of its own.
