History of Dot.com

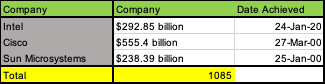

Are we at the same point in March 2024 as we were in March 2000? Is this the peak of dot.ai, in terms of market valuation for some incumbents, just as the earlier peak came when infrastructure shifted from the old-guard OEMs to the cloud players? Going back in history, at the peak, the market caps of Intel (silicon), Cisco (networking) and Sun (compute) totaled $1T.

Nvidia’s market valuation is (coincidentally) the same as the peak combined market cap of these three, and it has a chip business, a systems business and a networking business. It took 23+ years to get here. But let’s go back in time before March 2000. The seeds of the shift were planted by 1998.

1998 was the year Sun launched the E10K (a $1M server) and the Ultra 5 (a $1000 workstation/server). Both were the workhorses of the then-emergent dot.com: every internet company used Sun hardware, until they did not. The fish were jumping into the boat. Many ML/GPU fanboys of today were toddlers or yet to be born.

1998 was the year Intel came from behind (TI and IBM) on process leadership. Back then it was 0.18 µm and Coppermine for the Pentium III that boosted the clock-rate marketing. But it was a real 2X in FOM relative to Intel’s competitors (TSMC then was not even in the picture for high-performance logic).

1998 was the founding year of Google, VMware and Equinix as I have mentioned before.

The dot.com peak marked a market shift in multiple ways. To name the top four:

Tech Shift: From scale-up (Enterprise ERP & HPC) to distributed systems (cloud). The emergence of multi-core and threading was coincident. A new compute paradigm.

Business model Shift: Capex (OEM HW sales) to Opex (Cloud - happened in reality in 2007, but seeds were sown in 2000).

App Shift: 3-tier Enterprise to SaaS. The Java stack (J2EE) gave way to the LAMP stack. Java, while ubiquitous and open, was subsumed by the truly open: LAMP.

Open vs Closed: Open Source emerged as a key GTM channel and changed the landscape as it became more popular. And as I have said before, AWS benefitted most. Thus the backlash lately.

There are 4 questions that come to mind.

Are we at the same point as in March 2000, with today’s shift being dot.ai? That is, Nvidia is the sum of Cisco, Intel and Sun from back in 2000. Is this a near-term peak before the real AI exponential kicks in?

Who will be left behind with this shift if it happens, and what will Nvidia look like after this transition? Will it meet a fate similar to that of Sun, Intel or Cisco, or a different one?

What is likely to emerge that we have not seen yet?

Where will we end up in this Open vs Closed debate/bifurcation?

The shift today is in how infrastructure will be built (scale-up to distributed to a hybrid scale-up/scale-out) and how infrastructure will be delivered. Now in 2023, Intel is facing the same challenge as back in 1997: trying to bring back process leadership. Seems like it’s 2024 with the 1.8 nm node, as Pat has repeated again and again: 5 nodes in 4 years.

But let’s start with Nvidia.

Nvidia today is at $2.6T in market cap and firing on all cylinders, delivering compelling solutions at all layers of the infrastructure stack: silicon, sub-systems, systems (DGX) and cloud.

It’s worth considering the ‘wisdom of the crowds’, i.e. the broader market is valuing Nvidia as the sum of these three (silicon, networking, systems) and as a cloud player as well (DGX Cloud). It is even getting ahead of itself by positioning Nvidia as the category for the new emergent cloud. Reference my other blog here on Cloud vs Fabs. Ashwath’s valuation analysis is a good baseline. Jensen has his work cut out to meet the crowd’s expectations.

Nvidia has many potential moats as well as potential ‘sink holes’.

The Moats

AI is a tsunami Nvidia has helped create. Thus, going forward, it has the potential for winner-takes-all and has timing as a tailwind.

Full systems thinking: having best of breed at all layers of the stack relative to its competitors, including the big three public clouds and even Facebook.

Jensen (has been and will be) a moat, both as a persona and through the company culture he has built (nimble, agile, focused, with attention to detail and accountability).

CUDA and the developer ecosystem.

If the future has closer integration between AI, gaming, ‘metaverse’ and raw HPC, he has a chip-level architectural moat. [Personally, I think the real value part of the market, which is AI, just needs killer price/power/performance for linear algebra, though that is perhaps less obvious to most. Groq and TPUs are good ones to study and compare. It’s a triad of memory capacity, memory BW and then flops.]
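To make the triad of memory capacity, memory bandwidth and flops a bit more concrete, here is a rough back-of-the-envelope sketch in Python. It is only an illustration under stated assumptions: the function name `decode_limits`, the hardware numbers (24 GB, 1000 GB/s, 100 TFLOPS) and the 7B-parameter, 2-bytes-per-parameter model are all hypothetical and describe no real product. It uses the common rule of thumb of roughly 2 FLOPs per parameter per generated token, and it ignores KV cache, activations and batching.

```python
def decode_limits(params_billion, bytes_per_param, mem_capacity_gb,
                  mem_bw_gb_s, peak_tflops):
    """Rough single-stream (batch 1) decode limits for a dense model.

    Ignores KV cache, activations and any overlap or caching effects.
    """
    params = params_billion * 1e9
    weight_bytes = params * bytes_per_param
    # Capacity: do the weights fit in device memory at all?
    fits = weight_bytes <= mem_capacity_gb * 1e9
    # Bandwidth: every weight is read once per generated token.
    tokens_per_s_bw = mem_bw_gb_s * 1e9 / weight_bytes
    # Flops: rule of thumb of ~2 FLOPs per parameter per token.
    tokens_per_s_flops = peak_tflops * 1e12 / (2 * params)
    return fits, tokens_per_s_bw, tokens_per_s_flops


# Hypothetical accelerator, not a real product: 24 GB, 1000 GB/s, 100 TFLOPS.
fits, bw_limit, flops_limit = decode_limits(7, 2, 24, 1000, 100)
print(fits, round(bw_limit, 1), round(flops_limit, 1))
```

With these assumed numbers the bandwidth ceiling sits about 100x below the flops ceiling, which is one way to read the ordering above: capacity first, then bandwidth, then flops.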

The Sink holes

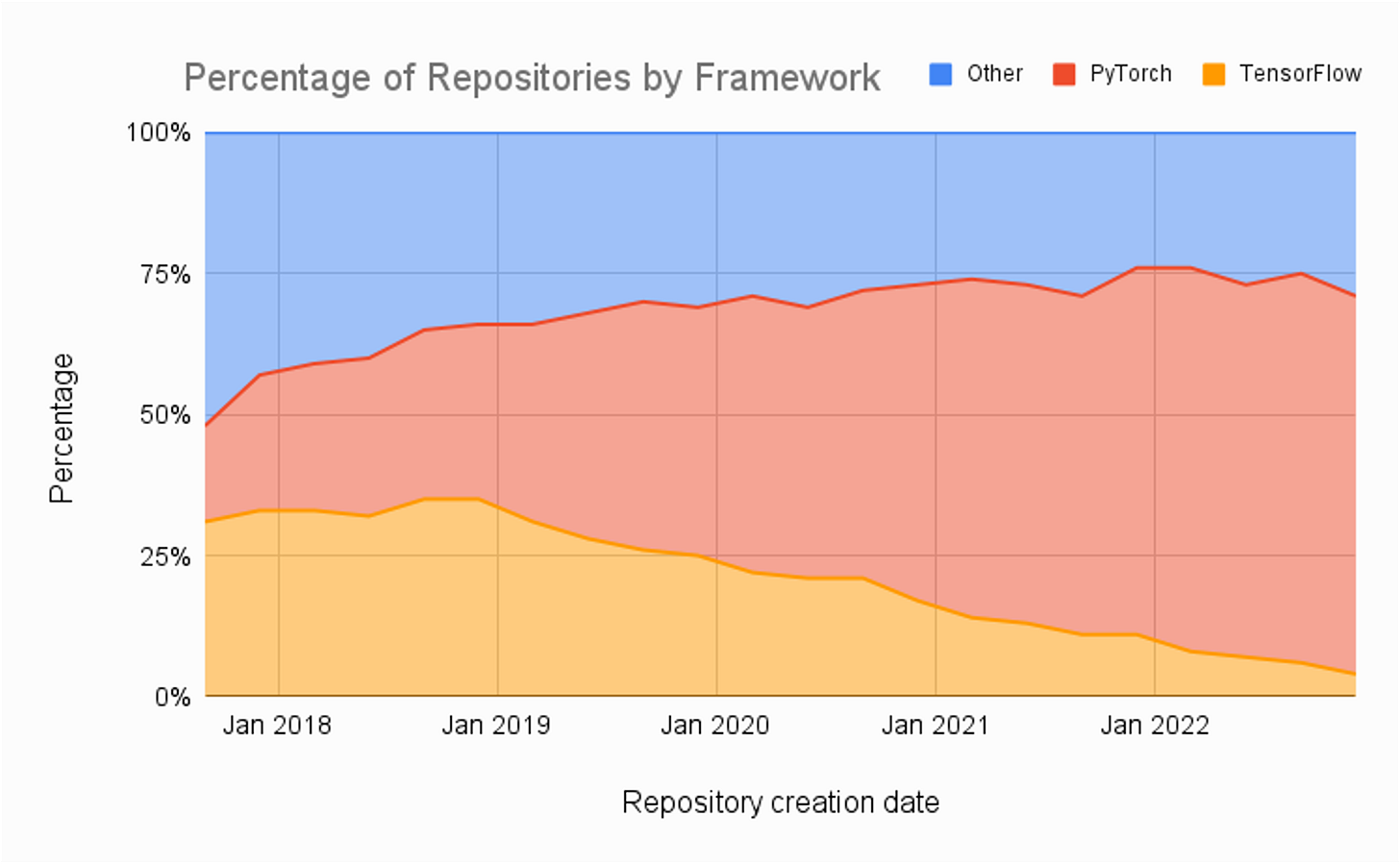

Open vs closed (then it was Solaris vs Linux, now it’s CUDA vs PyTorch; not the same, but similar). Clearly PyTorch is making inroads like Linux did between 1995 and 2002. In 2023 we are in the same state as in 2000. PyTorch (and the associated runtimes) runs on Google TPU (not CUDA). Recalling from 1993, Intel shared a slide showing that every major OS ran on x86 (Unix variants, DOS, Windows, early NT, BeOS, and many more, except for MacOS), asserting that x86 was the common denominator. Today every major silicon supports PyTorch. Not CUDA.

Competition: Are the cloud guys friend or foe? Both, but increasingly foe, with Google and its TPU, Amazon making deals with Anthropic for Inferentia and Trainium over Nvidia GPUs, and potentially Microsoft’s Maia, Cobalt and Athena looming on the horizon. If Sam Altman (OpenAI), rather than believing in Nvidia’s roadmap or working closely with Nvidia, is raising funds to do his own silicon, that exposes a weak underbelly for Nvidia, especially as PyTorch (Triton) becomes the unifying layer for most developers across multiple platforms.

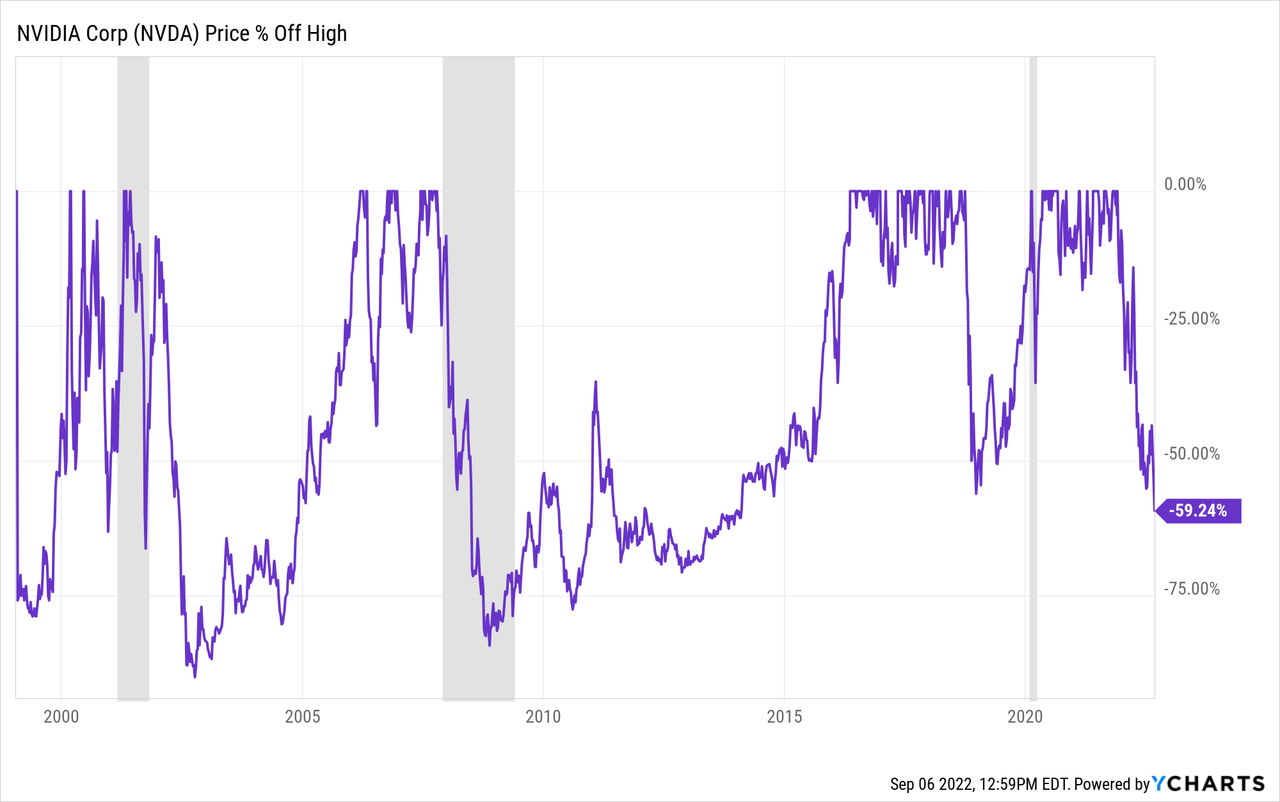

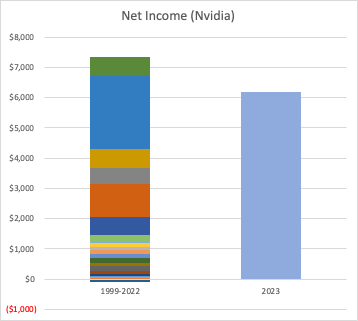

Financially, Nvidia has been spotty. It has moved from market to market - Graphics cards to rendering farms, to gaming to bitcoin to now AI.

In the last 9 months Nvidia has made as much money as it did in the previous 20+ years: approximately $7B. Make hay while the Sun shines?

The table below is not a fair comparison of the 3-4 years leading up to the dot.com bust with the current scenario, as dot.ai is unlike dot.com, but it is worth seeing the pattern, if any.

Financially, Nvidia does not have a sink hole, as Jensen is shoring up cash like crazy. At the same time, most did not see one in 2000 for Intel, Cisco and Sun either.

By all accounts, Nvidia is playing at round tripping. See below for the money flow. Of the $25+B of capital flowing to fund the cost of compute for GPUs, 80+% stays within Nvidia’s walled garden (except for Google). SemiAnalysis explains GPU cloud economics eloquently here.

Training vs inferencing: This feels like video encode vs decode, but perhaps 100x or 1000x in scale. There is specialized hardware for encode, but a range from software to hardware for decode. Is it similar here (except for scale)? Will the total compute dollars for inferencing, like video decode, dwarf training?

Don’t count out algorithmic, system and compilation improvements to scale compute down to your laptop. While training will not be hosted on your PC or Mac, there is certainly a 10x-100x gain available at all layers of the stack, as the performance of the Mistral 7B parameter model shows.

If there is any bump in demand, it will cause a ripple effect across the entire Nvidia value chain, as his model is based on demand outstripping supply. The risks are many: competition, algorithmic improvements in the cost of inference, bifurcation of demand between foundation model training and serving, market adoption of LLMs needing more time than the current hype cycle allows, and more.

Nvidia’s performance the last 5 years relative to its big 3 (Broadcom, AMD, Intel)

Intel

Intel is a dark horse. Everybody has counted them out, which is a good place to be (it’s only upside from here). For those who were not yet born in 1998, let me share this reminder…

The Upsides…

Intel: Don’t count them out. They have come from behind before on process, architecture and SW leadership. This is a 3-5 year game before you can call it.

To remind most people, Intel did not have the leading-edge CMOS process through the bulk of the 1990s. They caught up in 1998 (0.18 µm, to be specific), as my projects with TI faced the assault of Intel’s xtor FOM improvements back then. The above visual is a historical reminder. Sun and TI embarked on pure CMOS starting in 1991 and were on a competitive roadmap till 1998, shipping 4 world-class leading microprocessors in that period: the first CMOS SoC, the first 64-bit server CPU at volume (MIPS was ahead in time, but smaller in size), the first glue-less SMP and the first dual-core microprocessor. All of that did not matter due to the shift from Solaris (closed) to Linux (open), i.e. the architectural shift that happened between 1998 and 2003. 2024 is the year PyTorch reaches an inflection point in supporting more than one accelerator. The tsunami wave is ahead of us.

PC AI: There is AI in the cloud. Then there is AI in your device. That is as big a market (TAM) or bigger, for both Intel and Apple.

Intel has the largest OSS developer investment at silicon level (largest contributor to Linux).

IFS could be an upside in many ways. xPUs do not have to be standard products. They could be custom versions for big customers.

The past 9 months…

The downsides. There are many, among them:

The culture of execution is not the same as in the 1990s, and there is lots of work ahead to bring it back (work in progress).

Speed/agility (compared to Nvidia), full systems thinking and supporting diverse stakeholders

Intel’s performance relative to its 3 competitors (BAIN) the last 6 months.

AMD

There is not much for me to add here. On both the CPU and the GPU/xPU side, AMD’s strategy seems purely focused on taking market share through sheer focus and execution.

But AMD has good pragmatic engineering on both the CPU side and the GPU side. Much remains to be done on software. It looks like that gap might be closing with the help of Meta, Microsoft and the OSS community. Still, measured by skilled/trained SW engineers, AMD lags Intel and Nvidia. This gap needs to be addressed for AMD to be competitive.

Broadcom/VMware

Too early to tell. More questions than answers for now. Will skip for later. It is certainly the second most valuable chip company and is getting some of the AI aura.

The dot.com vs dot.ai similarities

Scale, cost and availability caused the shift from scale-up to scale-out, or distributed systems. Will it happen again? It feels like it, but this time it is small-scale scale-up combined with DC-scale scale-out. DGX is a good reference point.

Emergence of new infrastructure - public cloud. Like then - do we see the emergence of AI Cloud? Cloud vs Fabs thesis.

If market cap is a proxy for success (not always), the 5-year and 6-month comparisons for BAIN (Broadcom, AMD, Intel, Nvidia) are interesting.

Net new workloads (SaaS) created the new stack. Same this time? It seems like this time it’s ChatGPT, Accelerators, Models, PyTorch (the new CAMP?).

There was round tripping then too.

For the past 50 years, thanks to Moore’s law, the marginal cost of compute going to zero has been the trend, creating new categories (microprocessors, Internet, social, mobile, to name a few). The alternatives for driving cost down are coming…

Venture dollars are pouring in. Then it was for cost of customer acquisition (CAC); this time it’s for cost of compute (CoC).

Differences between dot.com and dot.ai…

The AI market is 10x-100x the size of the dot.com market. The sheer size gives more TAM and SAM for players to be standalone. AI is changing work, and that is a multi-trillion dollar TAM.

The gap between the boom and the bust was long then, perhaps 3-5 years. At the rate technology adoption is going today, there will be a boom-bust-boom cycle, but the time constants are probably a lot shorter. You just need enough cash for the rainy day; last time, some did not see it coming or did not have enough cash to ride out the down cycle.

As the dot.com tidal wave receded, it wiped out RISC microprocessors and a few OEMs (SGI, Sun, DEC, IBM) and left us with x86 (Dell, HPE, Lenovo + ODMs) as the dominant server platform(s). There will be some casualties this time too, but this time the silicon companies, the four (BAIN), are emergent rather than receding as they were back then.

Once the training hardware for the Magnificent 7 (Google, OpenAI/MSFT, Facebook, AWS, Baidu, Anthropic, Mistral) is built out, is there demand for training hardware beyond them, given that they are the only ones financially and technically structured to build foundation models?

Jensen, as the leader of Nvidia with a founder’s stamina, is creating a new swim lane for Nvidia with DGX Cloud; cash flow and investments all point that way. There was private cloud, then came public cloud, and now there is AI cloud.

There was a second wave after the 2001 dot.com bust (circa 2007 was the knee of the exponential), when social (FB), mobile (iPhone) and cloud (AWS) all happened. We could see a correction (not a bust) in 2024, and 2025/2026 might bring the next wave, or we may never see a bust cycle. 2024 is going to be an interesting year.

Some other gentle reminders…

Don’t assume it’s a GPU-centric world, and don’t assume all the value is in the chip.

Don’t assume LLMs are the end game for AI. It’s the beginning, and it’s a brute-force approach to computation and memory utilization. Many step-function gains are still ahead. As we tackle memory placement and optimization, the need for compute will shrink. Today compute is over-provisioned w.r.t. memory for LLMs.

Don’t assume winners in round 1 necessarily survive through the 2nd wave. New players will emerge.

In closing…

Going back to the 4 questions I asked

Is it the same as dot.com? The simple answer is yes and no. There are some aspects that are similar (hype, round tripping, shift in ‘cloud systems’, out with the old and in with new entrants) and some that are different (AI/workload, cloud shift, systems arch, Open vs Closed).

Who will be left behind, and what is the math to support that? Certainly the OEMs will face more pressure, especially with the emergent AI cloud players and the new operating model. The chip companies, where consolidation has already taken hold, are likely to be the biggest beneficiaries, with Nvidia being the lighthouse of the 4 BAIN (Broadcom, AMD, Intel, Nvidia).

What new players or categories are likely to emerge? There have been many predictions. There are smarter folks projecting, but regardless of all of them, I hold that these will be true:

I do think the semi companies (BAIN) will hold their own against the cloud.

After the consolidation of cloud computing that happened over the past 15 years, we will actually see some diversity along geo-political needs as well as workload needs: vertical AI clouds.

We are yet to see new layers of the cake and category makers. As Chamath said this week on future value creation opportunities based on generative AI: “Most of the money made off the invention of refrigeration wasn't by the makers, but companies like Coke. If AI/LLMs are refrigeration, who is next Coca-Cola?”

Open vs Closed. Interesting dynamic between OpenAI, Google, Facebook and LLaMA, Mistral vs closed models. Open source/model will win eventually. Can’t say when and how. But 2024 seems like the inflection point.

If you are interested in penguins and LLaMAs… You can literally buy this Penguin on a LLaMA from Amazon.